George Jiayuan Gao

gegao@seas.upenn.edu

I am a second-year Robotics Master’s student in the General Robotics, Automation, Sensing & Perception (GRASP) Laboratory at the University of Pennsylvania, advised by Prof. Nadia Figueroa and Prof. Dinesh Jayaraman.

Previously, I completed my undergraduate studies in Mathematics and Computer Science at Washington University in St. Louis, where I worked with Prof. Yevgeniy Vorobeychik.

My research broadly focuses on the generalization of learning-based robot policies 🦾.

Link to my CV (last update: June 2025).

News

| Date | News |
|---|---|
| Jun 15, 2025 | Our paper “Out-of-Distribution Recovery with Object-Centric Keypoint Inverse Policy For Visuomotor Imitation Learning” was accepted at IROS 2025! |
| Jun 08, 2025 | VLMgineer has been accepted for Oral Spotlight |
| May 14, 2025 | Honored to have received Penn Engineering’s graduate Outstanding Research Award this year! |
| Nov 09, 2024 | Check out our Spotlight Presentation |

Publications

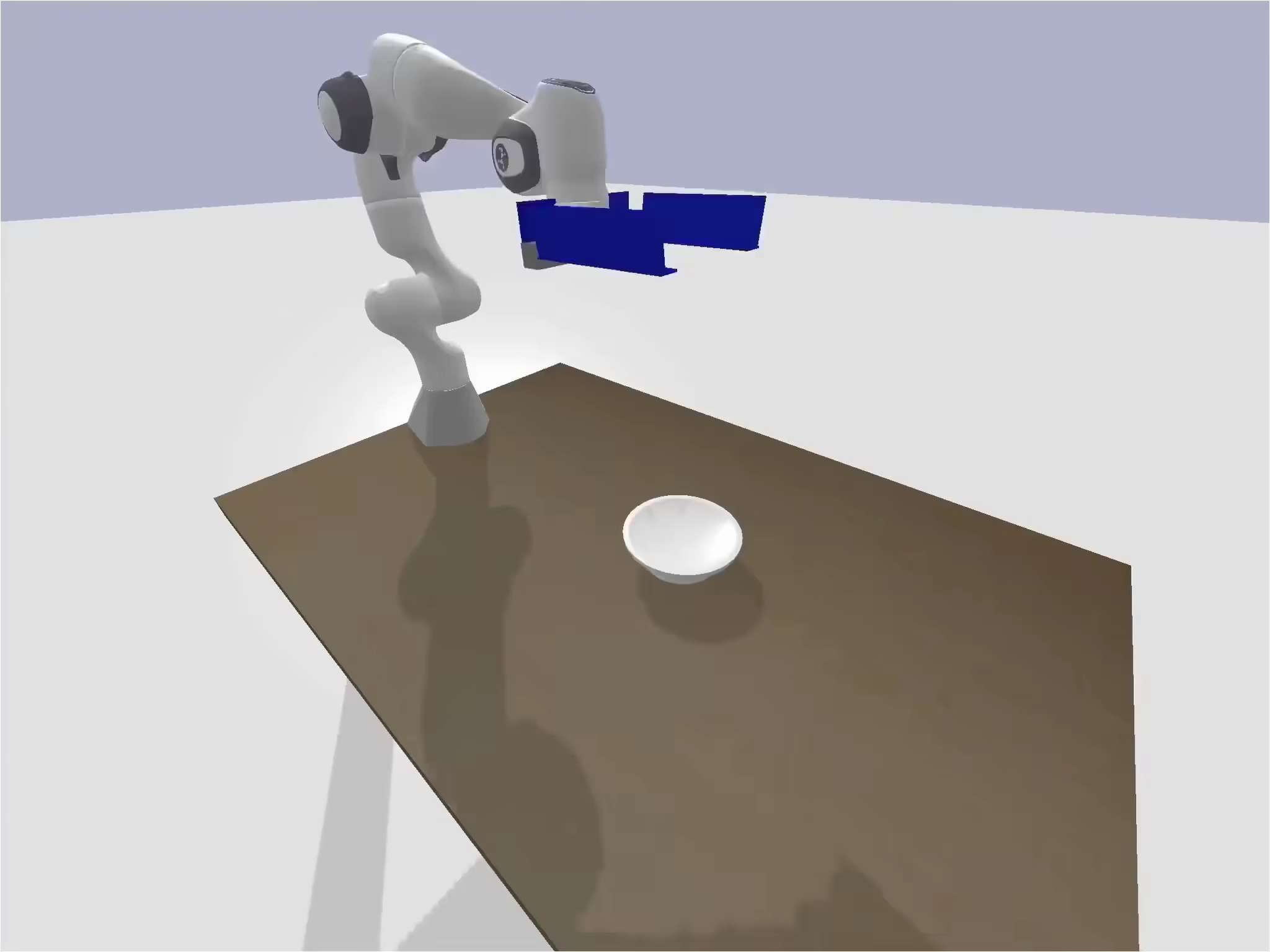

- VLMgineer: Vision Language Models as Robotic Toolsmiths

Spotlight at RSS Workshop on Robot Hardware-Aware Intelligence, 2025.


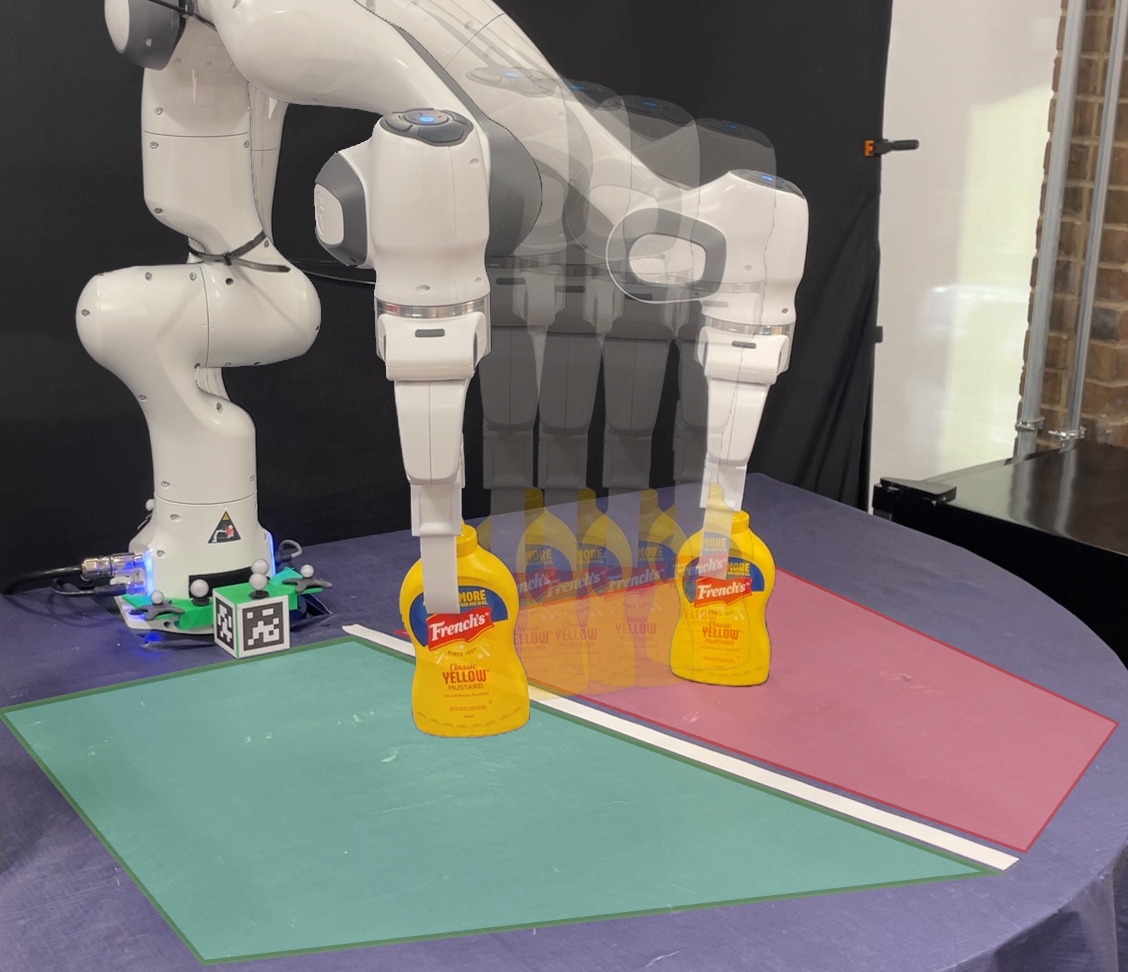

- Out-of-Distribution Recovery with Object-Centric Keypoint Inverse Policy For Visuomotor Imitation Learning

International Conference on Intelligent Robots and Systems (IROS), 2025.

Selected Projects

-

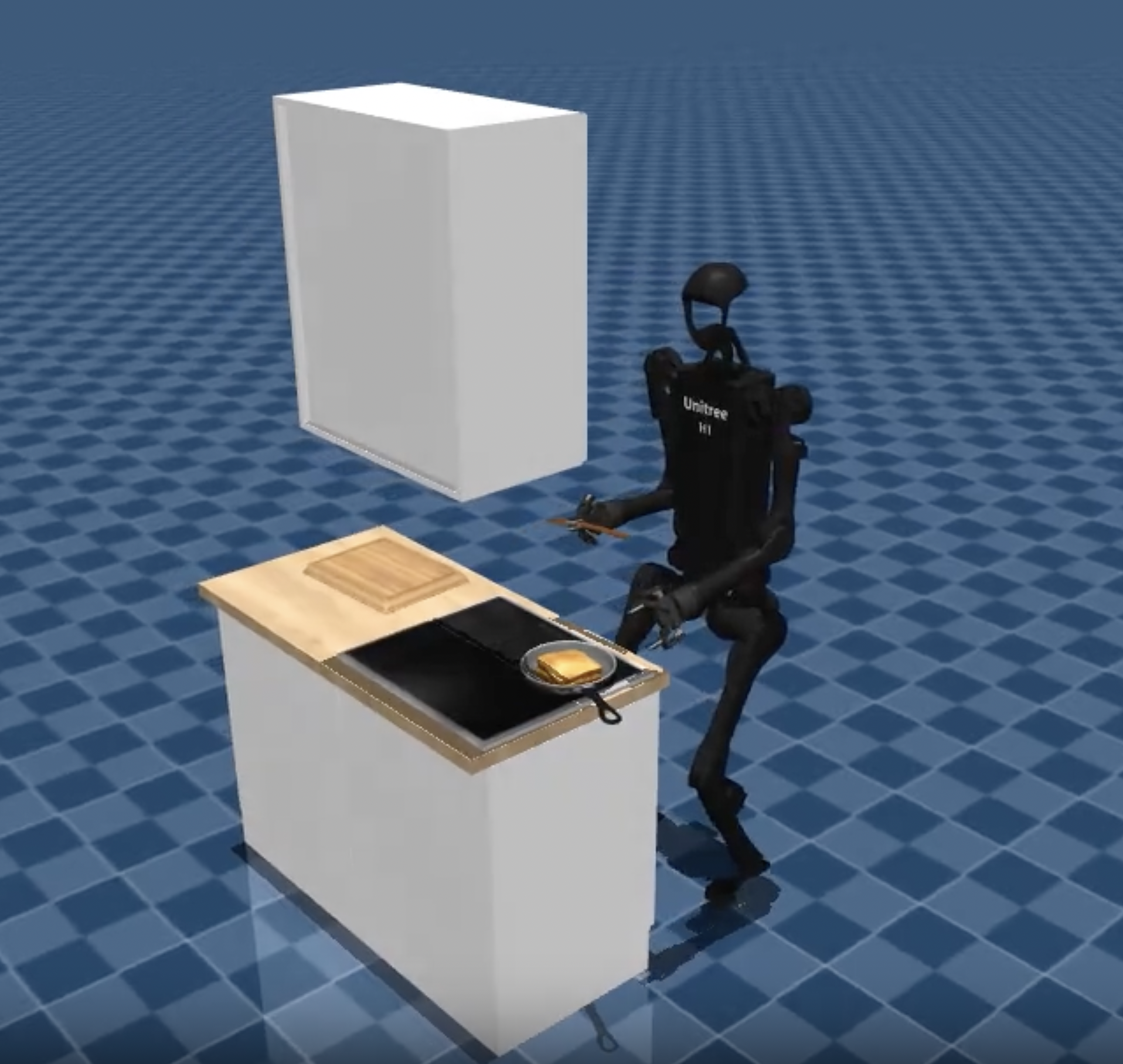

(On-Going) Eureka for Manipulation: Real-World Dexterous Agent via Large-Scale Reinforcement LearningTraining a skilled manipulation agent with RL in simulation that can zero-shot transfer to the real world is hard. The question is: does this get any easier when we add LLM in the loop and utilize ginormous levels of computing power, such as hundreds of Nvidia’s latest generation of data-center GPUs?, 2025.

(On-Going) Eureka for Manipulation: Real-World Dexterous Agent via Large-Scale Reinforcement LearningTraining a skilled manipulation agent with RL in simulation that can zero-shot transfer to the real world is hard. The question is: does this get any easier when we add LLM in the loop and utilize ginormous levels of computing power, such as hundreds of Nvidia’s latest generation of data-center GPUs?, 2025. -

- (On-Going) Stable Visuomotor Policy from a Single Demo: Elastic Action Synthesis Data Augmentation

We propose a methodology that uses our in-house Elastic-Motion-Policy, enabling the training of visuomotor policies with full spatial generalization from only a single demonstration. 2024.

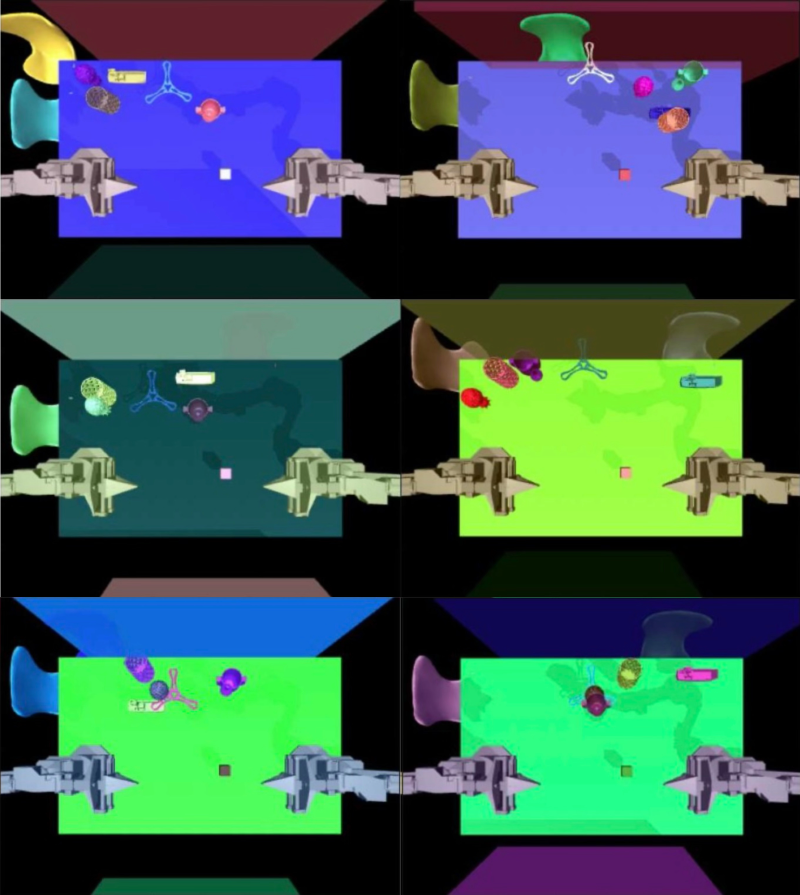

- Novel Environment Transfer of Visuomotor Policy Via Object-Centric Domain-Randomization

Proposed GDN-ACT, a novel, scalable approach that enables zero-shot generalization of visuomotor policies across unseen environments, using a pre-trained state-space mapping for object localization. May 2025.

- Modular Gait Optimization: From Unit Moves to Multi-Step Trajectory in Bipedal Systems

Proposed the Gait Modularization and Optimization Technique (GMOT), which leverages modular unit gaits as initialization for Hybrid Direct Collocation (HDC), reducing sensitivity to constraints and enhancing computational stability across various gaits, including walking, running, and hopping. Dec 2023.

- Miniature City Autonomous Driving Platform Development with Real-Time Vision-Based Lane-Following

Developed the drive stack for Washington University’s inaugural miniature city autonomous driving platform by building the vision-based lane-following pipeline. May 2023.